Company: Top Hat | Role: Senior Product Designer | Timeline: ~1 year | Team: PM, Data Scientist, Software Engineers (3)

Project Overview

Students face growing pressure to learn effectively, yet many lack personalized support. We introduced Ace Study Assistant, an AI-powered chat for on-demand help, but adoption lagged behind its potential.

Goal:

Increase adoption by showing Ace’s value and integrating it seamlessly into student study habits.

Focus Areas:

- Highlight the benefits of AI-powered learning

- Create smooth pathways into daily use

- Refine features using data-driven insights

- Drive growth through ongoing experimentation

My Role

I led the design for Ace Study Assistant’s adoption strategy, collaborating with my product manager, engineering, research, data science, learning science, and marketing. I partnered closely with engineering through development and iteration to align user needs with technical feasibility.

User Research

With limited insights into how students engage with AI tools, I partnered with our user researcher to uncover perceptions and needs.

Key Findings:

- Students valued quick, reliable help that simplified complex concepts.

- Many were hesitant, concerned about accuracy and trust.

- They wanted clear proof of how Ace supported their academic goals.

These insights shaped our prototypes and guided targeted experiments to refine Ace’s experience.

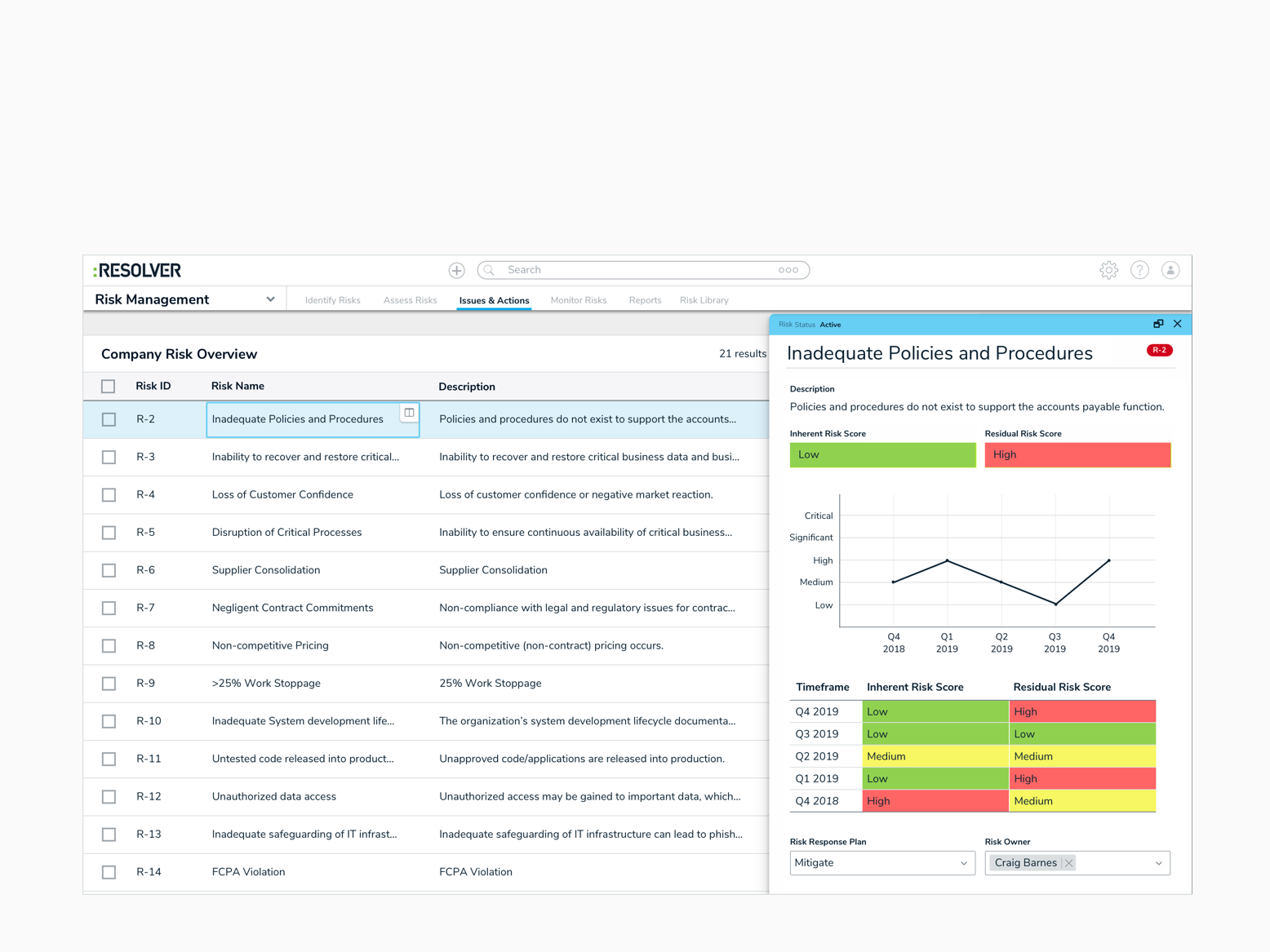

Design Experiments

I led a series of experiments to make Ace Study Assistant more approachable, useful, and integrated into study workflows. Each experiment tested hypotheses on what would drive adoption and engagement.

Experiment 1: Smart Defaults

We added four quick-action options (e.g., Question Help) to help students immediately understand Ace’s value, then refined them based on observed usage. For example, replacing the low-usage option 'Fun Fact' with 'Quiz Me' aligned with how students were already studying and drove higher usage.

Comparison of Ace Study Assistant before and after implementing smart defaults, designed to highlight the tool’s value to users.

Ace Study Assistant in context within Top Hat

Experiment Results

Smart Defaults were the first interaction with Ace for 6% of users, and messages sent per user rose 30% when Smart Defaults were available. However, while Smart Defaults boosted engagement, they had little impact on activation: 94% of users who interacted with them were already familiar with Ace. This underscored the ongoing challenge of low discoverability, so our next experiment focused on improving Ace’s visibility.

Experiment 2: Ask Ace from Highlights

Highlighting is one of the most frequently used features in Top Hat, so we used it as a natural entry point into Ace. Letting students ask Ace about their highlighted content connected an existing study habit to Ace’s capabilities. We also added smart options, such as “Simplify Language,” directly on the highlighted text to provide quicker assistance.

Highlight toolbar with the Ask Ace action menu expanded

Experiment Results

This experiment was highly effective in driving adoption, producing a 40% increase in usage. It showed that building entry points into high-traffic parts of the app improves discoverability and conversion, and it helped us reach our usage goals.

Experiment 3: Improving Accessibility & Onboarding

Ace’s placement in the toolbar limited visibility, so we introduced a dedicated CTA button to draw attention and boost engagement. We also simplified the start chat screen, enabling faster, easier onboarding. These changes made Ace more discoverable, approachable, and effective in driving first-time use.

Comparison of Ace Study Assistant's entry point before and after.

Ace in context, showing the dedicated button and improved start chat screen.

Experiment Results

Adoption increased by approximately 35% with the new dedicated button compared to the original sidebar button.

Experiment 4: Just-In-Time Nudges

To proactively engage students, we implemented contextual nudges that expanded from Ace’s entry point.

These nudges included:

- Due Date Nudge: Encouraging students to ask Ace for help when an assignment was due soon.

- Incorrect Answer Nudge: Offering assistance when a student submitted an incorrect answer.

- Page Summarization Nudge: Suggesting Ace’s help with summarizing textbook pages.

Incorrect Answer Nudge

Incorrect Answer Nudge in context

Experiment Results

The Incorrect Answer Nudge was the most successful in driving engagement because it directly addressed a moment of need. During the experiment, the nudge resulted in an 18.18% increase in engagement and a 19.92% boost in conversion rates. Notably, 24.15% of new Ace users during this period were attributed to the nudge, and 63.75% of users who converted through the nudge had never used Ace before.

Iterative Approach

We designed and shipped each experiment within a sprint, rapidly iterating or pivoting based on results. This agile process let us learn in real time and continuously improve the user experience.

Impact

Before these improvements, Ace Study Assistant had an activation rate of 1.81%, with 5,859 active students (defined as those who sent Ace two or more messages). After the enhancements, the activation rate rose to 10.0%, representing 32,448 active students.
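
To put the before and after figures on a common scale, here is a minimal Python sketch of the arithmetic. It uses only the numbers reported above; the function names (`is_active`, `fold_change`) are hypothetical helpers written for illustration and are not part of Top Hat's analytics.

```python
def is_active(messages_sent: int) -> bool:
    """Activation as defined in this case study: two or more messages sent to Ace."""
    return messages_sent >= 2


def fold_change(before: float, after: float) -> float:
    """Return how many times larger `after` is than `before`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return after / before


# Figures reported in this case study.
ACTIVE_BEFORE, ACTIVE_AFTER = 5_859, 32_448
RATE_BEFORE, RATE_AFTER = 1.81, 10.0  # activation rate, in percent

active_fold = fold_change(ACTIVE_BEFORE, ACTIVE_AFTER)
rate_fold = fold_change(RATE_BEFORE, RATE_AFTER)
point_gain = RATE_AFTER - RATE_BEFORE  # change in percentage points

print(f"Active students: {active_fold:.2f}x")
print(f"Activation rate: {rate_fold:.2f}x (+{point_gain:.2f} percentage points)")
```

Both measures work out to roughly a 5.5-fold increase, which suggests the eligible student population stayed about the same size between the two measurements.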